Original Research

Evolving a conceptual framework and developing a new questionnaire for usability evaluation of blended learning programs in health professions education

Anish Arora1,

Charo Rodriguez1,2,

Tamara Carver2,

Laura Rojas-Rozo1,

Tibor Schuster1

Published online: September 6, 2021

1Department of Family Medicine, School of Medicine, Faculty of Medicine and Health Sciences, McGill University, Montreal, Québec, Canada

2Institute of Health Sciences Education, School of Medicine, Faculty of Medicine and Health Sciences, McGill University, Montreal, Québec, Canada

Corresponding Author: Charo Rodriguez, email charo.rodriguez@mcgill.ca

DOI: 10.26443/mjm.v20i1.961

Abstract

Background: Blended learning programs (BLPs) have been widely adopted across health professions education (HPE). To bolster their impact on learning outcomes, the usability of BLPs should be rigorously evaluated. However, there is a lack of reliable and validated tools to appraise this dimension of BLPs within HPE. The purpose of this investigation was to evolve a conceptual framework for usability evaluation in order to initially develop the Blended Learning Usability Evaluation – Questionnaire (BLUE-Q).

Methods: After the completion of a scoping review, we conducted a qualitative descriptive study with seven purposefully selected international experts in usability and learning program evaluation. Individual interviews were conducted via videoconferencing, transcribed verbatim, and analyzed through thematic analysis.

Results: Three themes were identified: (1) Consolidation of the multifaceted ISO definition of usability in BLPs within HPE; (2) Different facets of usability can assess different aspects of BLPs; (3) Quantitative and qualitative data are needed to assess the multifaceted nature of usability. The first theme adds nuance to a previously established HPE-focused usability framework, and introduces two new dimensions: ‘pedagogical usability’ and ‘learner motivation.’ The second and third themes provide guidance on structuring BLP evaluations within HPE. These findings informed the development of the BLUE-Q, a new questionnaire that includes 55 Likert scale items and 6 open-ended questions.

Conclusion: Usability is an important dimension of BLPs and must be examined to improve the quality of these interventions in HPE. As such, we developed a new questionnaire, solidly grounded in theory and the expertise of international scholars, currently under validation.

Tags: Blended learning, Health professions education, Program evaluation, Questionnaire design, Usability

Introduction

This article documents the second of three phases for the development and preliminary validation of the Blended Learning Usability Evaluation – Questionnaire (BLUE-Q), specifically conceived to appraise the usability of blended learning programs (BLPs) in health professions education (HPE).

Traditionally, blended learning has been defined as a pedagogical approach that utilizes both face-to-face teaching and information technology. (1-3) To ascribe limits to this broad definition, scholars indicate that learning is truly blended when education is provided through online learning methods for approximately 30 to 79% of the program. (4) Any less than 30% of technology use would refer to technology-assisted learning and any more than 79% would refer to online learning. (4) As technology continues to advance, new models of teaching and learning such as hybrid or hyflex have been developed and have pushed us to reconsider what blended learning is. (5-9) However, to our understanding, what is common among recent definitions of BLPs from around the world is that the blend being referred to is not necessarily about “in person” and “technology-facilitated” learning, but rather about the use of both synchronous and asynchronous learning modalities in a program.
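The proportion-based thresholds cited above (4) can be expressed as a simple rule. The following Python sketch is illustrative only: the function name `classify_delivery_mode` and the `online_share` parameter are hypothetical, and the hard cut-offs at 30% and 79% treat as exact what the source describes as approximate.

```python
def classify_delivery_mode(online_share: float) -> str:
    """Classify a program by the percentage of it delivered online.

    Assumption: `online_share` is a percentage between 0 and 100.
    The cut-offs follow the approximate 30-79% range cited in the text (4).
    """
    if not 0 <= online_share <= 100:
        raise ValueError("online_share must be between 0 and 100")
    if online_share < 30:
        return "technology-assisted learning"
    if online_share <= 79:
        return "blended learning"
    return "online learning"
```

This rule reflects only the traditional, proportion-based definition. It does not capture the more recent view, noted above, that the blend concerns synchronous and asynchronous learning modalities.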

Despite heterogeneity in definitions, BLPs have been shown to benefit learners by enabling them to tailor their educational experiences to their needs and, to some extent, to control the pace, time, and location of their learning. (1,10-17) BLPs can also empower learners and educators through learning management systems, which enable meaningful monitoring of learner progress. (2,10,11,17) Additionally, BLPs offer potential cost savings for institutions. (16) With these benefits in mind, BLPs have been adopted at an increasing rate across HPE faculties and departments over the last decade. (17-20)

The COVID-19 pandemic and its related lockdown measures have accelerated this trend, as some learners have been unable to receive traditional classroom education. (21-26) Interestingly, HPE faculties and departments show some willingness to continue adopting and developing their BLPs, even as pandemic-related distancing measures ease. (27,28) This is coupled with increased acceptance of BLPs among HPE learners and their willingness to continue using BLPs beyond the context of the pandemic. (27,29)

Though BLPs are valuable, relatively well accepted, and increasingly adopted, these educational interventions must be evaluated routinely and in their entirety to ensure that they are effective and systematically improved. (1,30,31) This is especially necessary as new knowledge is developed in the teaching and learning domain and as innovative technologies become more readily available for use in educational systems. However, the lack of a common lexicon of evaluative terminology, frameworks, and methods has been noted as a major threat to the comparability, generalizability, and overall systematicity of BLP evaluation, particularly in HPE. (32,33) In the general field of education, a diversity of frameworks and models to evaluate BLPs exists; the vast majority, however, focus on evaluating the technology involved in BLPs and not necessarily the entirety of the program. (34) Additionally, scholars highlight that BLP evaluations are often unique to each program, and consistently unique evaluations of similar programs within and across institutions may hinder the rigorous comparison of the potential effects of BLPs on learners. (31,34) Within HPE specifically, a 2021 scoping review of 80 studies from 25 countries found a lack of consistent evaluative terms and frameworks. (33) Interestingly, the vast majority of studies (86%) used questionnaires to evaluate their programs. (33) However, these questionnaires were developed to measure specific concepts such as “communication” or “learning” in general, and not specifically within the context of BLPs. Moreover, no questionnaire was identified that specifically evaluated the usability of BLPs within HPE. (33)

Several authors have suggested that usability is an instrumental pillar for BLP evaluation. (35,36) Usability, as discussed by the International Organization for Standardization (ISO), is a multidimensional concept that measures the “extent to which a system, product or service can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”. (37) Across the field of education, usability has traditionally been viewed as “ease-of-use” of a technology and has been implemented in the evaluation of e-learning modalities. (33,38-40) However, this limited understanding and application of usability does not adequately reflect the construct’s multifaceted nature and depth. (33)

Developing the BLUE-Q

In this context, we conceived a research program with three consecutive phases to rigorously construct an instrument to evaluate the usability of BLPs in HPE. Phase 1 consisted of a scoping review that aimed to understand how usability has been conceptualized and evaluated in HPE. (32,33) Phase 1 results highlighted that usability has been implicitly utilized across all evaluation studies and that explicit application of this construct can facilitate the adoption of a shared lexicon for BLP evaluations. (33) Through a deductive analysis, we developed a conceptual map of usability and its application in BLPs within HPE that: (1) depicts the three major pillars of usability (i.e., effectiveness, efficiency, and satisfaction); (2) includes two newly identified concepts that are often used interchangeably with usability (i.e., accessibility and user experience); and (3) lists 22 sub-concepts that exist across HPE BLP evaluations. (33) This conceptual map provides an understanding of potential items that can be included in a questionnaire to evaluate usability in BLPs within HPE.

Our purpose in Phase 2, reported here, was to further deepen the previously developed HPE-focused conceptual framework for usability by exploring the perspectives of learning program evaluation experts, with the ultimate goal of developing a new questionnaire to systematize usability evaluations in BLPs across HPE. This approach to evolving conceptual frameworks generated from scoping reviews adheres to recommendations made by methodologists for ensuring that results from such knowledge syntheses are refined through consultation with relevant stakeholders. (41)

Methods

Ethics

Ethics approval to conduct this study was received from the McGill University Faculty of Medicine and Health Sciences' Institutional Review Board (Study Number: A06-E42-18A). All participants reviewed and signed consent forms prior to their interview.

Research Question and Methodology

The question that guided this research endeavor was: how do experts conceptualize usability and perceive its application in evaluating BLPs within HPE? To answer this question, we adopted a qualitative descriptive methodology. (42)

Participant Selection

Adopting a purposeful sampling approach, (42,43) we recruited international experts in usability and learning program evaluation. Usability evaluation in the context of BLPs, particularly in HPE, is an emerging domain of inquiry; thus, few experts exist in this area. However, a greater number of experts assess usability for other learning pedagogies such as e-learning and Massive Open Online Courses. Therefore, to identify experts, we primarily searched for authors with Google Scholar profiles, for several reasons: (1) Google Scholar allowed for easy searching by author interests (i.e., authors with interests in ‘usability’ AND ‘blended learning’ can be efficiently identified); (2) Google Scholar allowed for easy reference to author publications and author influence (i.e., h-index and i10-index scores), which assisted in identifying their expertise in the current context; and (3) Google Scholar provided an indication of authors' primary affiliations, which ultimately allowed for easier contact with potential experts.

Maximum variation may be advantageous when utilizing purposeful sampling to identify experts. (43) As such, experts were identified from across different continents and levels of expertise. Google Scholar searches consisted primarily of key words such as [(usability OR “human centred-design”) AND (“blended learning” OR e-learning OR “online learning” OR “hybrid learning” OR “flipped classroom”) AND “program evaluation”]. The first author compiled a list of potential scholars to contact based on their geographic location and the number of their relevant publications. This list included a total of 14 experts, of whom two were early-career investigators, six were mid-career experts, and six were senior-level experts. The list was reviewed and consolidated with co-authors. Based on discussions with the team, nine experts were contacted and seven agreed to be interviewed.

Data Collection and Analysis

An interview guide (see supplementary materials) was developed initially by the first author and was then refined by the co-authors in subsequent research meetings. The first author conducted individual interviews via Zoom videoconferencing software. Interviews lasted approximately 30 minutes on average. The first author transcribed the audio and video recordings verbatim with the aid of two assistants. All transcriptions were imported into QSR’s NVivo 12. Semantic thematic analysis, guided by Braun and Clarke’s framework, (44) was conducted independently by the first and fourth authors. A hybrid approach to analysis was taken whereby codes were: (a) initially grouped based on a previously developed usability evaluation framework for BLPs in HPE, (33) and (b) inductively developed after each interview. The two reviewers met three times during the coding process: after coding one transcribed interview, after coding four, and after coding all seven transcribed interviews. Initial themes were generated from the discussions between these two researchers, and were then revised and validated through meetings with the entire research team. Thematic data saturation “relates to the degree to which new data repeat what was expressed in previous data”. (45) No new themes were identified beyond the fourth interview. Thus, thematic data saturation was considered to have been reached with seven participants, and no further participants were recruited.
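The saturation check described above can be sketched in a few lines of Python. This is a hedged illustration and not the authors' procedure: the function name `last_interview_with_new_theme` is hypothetical, and the theme labels in the usage example are placeholders, not study data.

```python
from typing import Sequence, Set


def last_interview_with_new_theme(themes_per_interview: Sequence[Set[str]]) -> int:
    """Return the 1-based index of the last interview that added an unseen theme.

    Returns 0 if no interview introduced any theme. Saturation is suggested
    when this index is well below the total number of interviews.
    """
    seen: Set[str] = set()
    last_new = 0
    for index, themes in enumerate(themes_per_interview, start=1):
        if themes - seen:  # at least one theme not seen in earlier interviews
            last_new = index
        seen |= themes
    return last_new


# Placeholder labels only; these are NOT the themes or codes from the study.
example = [{"A"}, {"A", "B"}, {"B", "C"}, {"A", "C"}, {"B"}]
print(last_interview_with_new_theme(example))  # prints 3
```

In this placeholder example, the last two interviews repeat earlier themes, which is the pattern the quoted definition of thematic saturation describes.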

Results

The experts who participated in this study originated from and worked in Australia, Brazil, England, Greece, Indonesia, Italy, and the United States. Two were women and five were men. Their professional roles included: an Associate Dean, a Full Professor, an Associate Professor, two Academic Faculty Members, an Industry Lead, and a PhD Student. Through thematic analysis, three themes were identified: the first focuses on consolidating the framework of usability, and the latter two focus on the actual process of evaluating usability. Tables 1-3 present illustrative excerpts from interviewees for each sub-theme within the three themes.

Theme 1: Consolidation of the multifaceted ISO definition of usability in BLPs within HPE

In defining usability and its application in BLP evaluations within HPE, four of the seven experts explicitly referred to the ISO definition. Using their experiences, each expert also provided a nuanced understanding of the specific facets of usability.

Table 1: Theme 1 – Consolidation of the multifaceted ISO definition of usability in BLPs within HPE

| Sub-Theme | Illustrative Quotations from Interviews |
| --- | --- |
| Usability (General) | “Well, I’m going to repeat my beloved definition of ISO standard which means that usability uhh is the extent that uhh people, certain people, can use certain products, systems, applications, platforms, etc. with specific goals in a specific context, with effectiveness, efficiency, and satisfaction are the three most basic usability dimensions… Well the one that I have already said before, it is an old definition, but uhh, there is no definition on usability by ISO. There is a newer definition on UX on user experience, but user experience is a larger concept. So when we are talking about usability, I think, if I remember well, nothing has changed I mean regarding the definition of usability, the basic pillars of usability are these three…It’s just that we have to start from the basics, that’s why I’m always referring to the basic three pillars – effectiveness, efficiency, and satisfaction… intrinsic motivation to learn is another important usability dimension, not just instructional design.” – Participant 2 |
| | “Well, usability is how the user can perform the tasks with effectiveness and efficiency, during a real time, for example. How well the platform supports users to perform tasks and accomplish these tasks. Right. So, usability is really well related to easiness of use, how well the platform is easy to use in simple terms… Well usually I use the definition of ISO, I think it is one of the most used definitions and it covers well the main points of usability I think… I use the latest definition of ISO because it involves also the emotions and experiences that are being considered user experience.” – Participant 4 |
| | “Usability is not such a short concept because it en-encompasses – uh – different aspects. For example – uh – you know, the classical definition is efficiency, effectiveness and satisfaction, and we can say that – uh – actually, these are the most important, the most important issues, the, the student must not spend more effort in using the platform or the blended – uh – system than learning the content. This is the, the golden rule of any educational system. So the system must be easy enough to be used not to distract the, the student from the study. Uh – and so, in this case, efficiency and effectiveness are quite related. Of course, satisfaction. But, even the user, the, the overall experience – uh – of the user is important because notwithstanding – uh – the good features, it is important to take the student involved, even emotionally involved and – uh – interested, and this may go beyond usability for a learning system. A, a, a, a, a usable system could be boring, let’s say, while for a learning system, engagement is, is of paramount importance. So, in, in, in the case of a learning system, I would add engagement to the typical – uh – items.” – Participant 5 |
| Accessibility and Organization of the Learning System | “If you are using a flip chart and people can’t read your writing and so people can’t use it. Using handouts with small fonts or videos that are flickering too much are also, in my institution, and I expect your institution, at the moment we got a whole raft of directives recently about making uhm all our teaching materials highly accessible – things like particular fonts that you’re using; and not using italics because if you’ve got dyslexia it’s difficult to read; about background – using things you’re not using like certain colours. So all those need to be thought through because what happens if you’ve got red-green colour blindness and you’ve gotta traffic light system and it’s not very explicit. So having just colour icons is not a good idea. And you need – Microsoft has got whole pages of this now so all those are – the problem is with technology, if you’re using technology e-learning whatever you wanna call it, soon as you start using technology what it does is foregrounds and heightens all these things because you have to think about it.” – Participant 1 |
| | “…if you, if you’re talking about the basics of usability for learning environments, you want to make it so that how you design it does not impede learning… So, that blended learning environment, sometimes we take things for granted because we might be in face-to-face and be like ‘Oh they can figure it out,’ but we have to be much more intentional about how we organize information, the assignments and instructions.” – Participant 3 |
| | “Usability and also accessibility; accessibility that means usability also for users with special needs – uh – with special needs and also with different technical skills. This is somehow important, especially important for learning systems because – uh – even students, learners with the most special technical skills, should should not left, should not be left behind. Because in some contexts – uh – the distance, distance part of learning is – uh – to get more insights into topics. And so it is – uh – necessary to assure these to, to everybody.” – Participant 5 |
| Effectiveness and Ease-of-Use | “Was it useful? Have you learned anything new? Have you not learned anything new? Is at those levels. But actually, what you would like to know is whether they’ve made a change, a sustained change in the way they think things, and have that transformation, transformation of self, transformation of others, and transformation particularly clinically.” – Participant 1 |
| | “So making the platform easier would make the learning process more effective for example. Well if they need to understand how to use the platform they will, they will be distracted I think, yeah. Trying to learn it before learning the content, right.” – Participant 4 |
| | “So, usability should be something that, I suppose, if something is usable then, the user doesn't really need to think too much about how to use it. They could just use it. Um, if you think about modern interfaces like maybe Facebook, for example, there are not too many people that are not able to use Facebook. Well, at least in its earlier days when it was more simple to use um..” – Participant 7 |
| Efficiency | “…people spend a lot of time developing resources. As soon as you decide to go down the route of using technology, you are committing time, money, a whole range of things to that resource.” – Participant 1 |
| | “…this part of learning to use the platform may have a learning curve right. At the beginning they will have some difficulties, but after they will use it more smoothly without thinking too much about how to use the platform to perform the tasks, right. But if we could minimize this learning curve, it will be better so we can learn faster and not bother with the platform.” – Participant 4 |
| | “… If you think about usability in the sense of a HCI human computer interaction style research or more set of ironed out theories of course it's important. We know that usability costs us a lot when it's not done right. Um, in educational space it would cost the students, it would costs students the ability to learn easily, um, its costs frustration. So, you know, and from an academic or teacher’s point of view, if we have to deal with systems that are not really usable it costs them time and again frustration that they probably don’t have.” – Participant 7 |
| Satisfaction and Learner Motivation | “…intrinsic motivation to learn should be the fourth pillar when we measure usability of e-learning environments to courses, applications. Because I think that motivation to learn is probably the most important parameter for a qualitative type of you know learning uhh situation. I mean even if it is traditional education, or e-learning, or something else. I mean any kind of education, or any kind of educational product, or any kind of learning module must have the you know, quality to provoke users in a very good manner and I mean to make them intrinsically motivated to learn … when we say intrinsic motivation, we refer to things that, you know, they are not as, I mean which would exclude uhh tangible rewards. I mean, I’m not intrinsically motivated to learn when someone says “hey [removed name to retain anonymity], if you are going to succeed with this e-learning course you are going to get paid $1,000.” No. It’s obvious that this is not intrinsic motivation. Intrinsic motivation comes from inside. When we are pleased with things, when we are deeply satisfied we tend to become intrinsically motivated to keep doing what we are doing, in this case to keep learning and keep using and interacting with an e-learning environment. Not because we are told to do this, not because someone has promised a good payment when we do it, but because we really really like it and we think this is valuable for us. Valuable, this is very important word which is directly related to intrinsic motivation to learn. When we are intrinsically motivated to learn, we strongly believe that this is a valuable thing to us. Valuable, once again, not in terms of financial terms or tangible rewards – no no.” – Participant 2 |
| | “Does it provide a positive experience or a negative experience?” – Participant 4 |
| | “…the classical definition is efficiency, effectiveness and satisfaction, and we can say that – uh – actually, these are the most important, the most important issues” – Participant 5 |
| User Experience | “User experience also covers another types of aspects, such as emotions, feelings. Not just satisfaction, overall satisfaction of the user. So how user felt when using the platform? Did he feel anxious? Tired? Or another type of feeling that he or she may feel during the interaction? So it is not just – Well I’m fine with the platform or I’m satisfied it – but also the emotions.” – Participant 4 |
| | “Uhh I have a belief that the more useable the product, it yield to a good user experience. So, if the usability focus more on the product, the UX or the user experience focus more the on experience of the user. So it means that we need to build a good product, high usability of the product to, so that, in that way we expect that the user will have a better experience in using it.” – Participant 6 |
| | “It is often difficult to measure those kind of things I think um. The role of things like participant evaluation sheets, in my opinion, should be borderline their happy sheets, they use their experience um measures their not measures of learning um, you know people can have a good experience at something and not learn something so, we have to be mindful of using these kind of things in evaluation programs” – Participant 7 |
| Pedagogical Usability | “The design of content which means how we design the learning material or you know how the instructional design has been transferred and you know packaged into an e-learning course.” – Participant 2 |
| | “Okay, of course the content must be as – um – must be complete, must be reliable, must be – uh – well understandable and provide many examples, many practical cases – uh – and also open-ended exercises because I, I, I do not trust much – uh – what is usually exploited, that is the multiple answer questions.” – Participant 5 |
| | “Should be able also to follow personal paths. For example, some students may already have some previous acquired skills, so maybe they could – some, some students could avoid a linear path because maybe they have already acquired, in some way – uh – skills that are required to follow-up.” – Participant 5 |

Accessibility and Organization of the Learning System

In the scoping review, accessibility was identified as synonymous with usability and comprised two sub-concepts: ease of access and access across time and space. (33) The experts nuanced this finding by indicating that accessibility is a sub-component of usability and that in BLPs it must address the fact that some learners may experience technological limitations (e.g., low internet bandwidth), may have special needs (e.g., visual impairments), or may have low technological literacy (e.g., possibly elderly learners). As such, when designing a BLP, experts highlighted the critical importance of understanding the learner population, specifically their challenges and needs, in order to optimize the educational program. One expert explained:

… all our teaching materials [must be] highly accessible – things like particular fonts that you’re using; not using italics because if you’ve got dyslexia, it’s difficult to read ... So all those need to be thought through because … as you start using technology, what it does is foregrounds and heightens all these things … (Participant #1).

Beyond recognizing issues of accessibility, experts indicated that system organization is a critical feature that must be planned in advance to ensure that accessibility needs are met appropriately. System organization refers to the clear, logical, and easy-to-navigate aspects of the content and learning systems, with a particular focus on e-learning environments (e.g., learning management systems).

Effectiveness and Ease-of-Use

Ease-of-use is a critical concept discussed by all experts. In fact, one of the three experts who did not refer to the ISO framework based their definition of usability strictly on ease-of-use:

For me, usability is ease-of-use of a product. How easy is that product to use. So, it’s a bit like [when I go to] one of our local coffee shops … [they’ve] got some little coffee cups … [and the handle is] really difficult to hold between your finger and your thumb. And you think ‘it’s got no usability’. Therefore, is it useful? No, it’s not useful (Participant #1).

Multiple experts indicated that ease-of-use is such a critical concept in the context of BLPs within HPE because:

…there are studies that point out that if a platform is not useable enough, students will take more time to try to understand how the platform works instead of learning the content that is being provided by this platform (Participant #4).

Though ease-of-use was frequently discussed by the experts, this concept was almost always directly related to effectiveness. For example, one expert mentioned, “… making the platform easier would make the learning process more effective” (Participant #4). Interestingly, whereas the scoping review we conducted provides 10 specific sub-concepts that fall under the usability facet of effectiveness, the experts centered their definition of effectiveness around two specific sub-concepts: (1) gaining knowledge and (2) gaining skills.

Efficiency

In the scoping review, efficiency was found to comprise four sub-concepts: time management; engagement with program content, materials, and faculty; cost-benefit analysis; and initial labour investment by faculty versus long-term results. (33) The experts provided nuance to this understanding of efficiency, with a focus on the first two sub-concepts. With respect to time management, the experts discussed time in relation to flexibility in learning, as well as the effort required to learn the material and navigate the learning platform. With respect to engagement, the experts discussed the importance of reflecting on and evaluating the resources available to learners (e.g., one-on-one time with instructors, self-paced modules, etc.).

Satisfaction and Learner Motivation

Satisfaction was discussed by the experts much as it was analyzed in the scoping review. Satisfaction was often described as positive or negative perceptions of the content, and of the synchronous and asynchronous aspects of BLPs. However, one expert added an interesting element to this description: ‘motivation to learn’ as a major indicator of satisfaction and of the overall usability of the BLP. The expert explained:

… I proposed in my [previous] work that intrinsic motivation to learn should be the fourth pillar when we measure usability … because I think that motivation to learn is probably the most important parameter for a qualitative type of, you know, learning situation ... (Participant #2).

When asked to provide more detail on what is meant by intrinsic motivation, the expert explained that:

… when we say intrinsic motivation, we refer to things that … exclude tangible rewards. … Intrinsic motivation comes from inside. When we are pleased with things, when we are deeply satisfied, we tend to become intrinsically motivated to keep doing what we are doing, in this case to keep learning ... Not because we are told to do this, not because someone has promised a good payment when we do it, but because we really really like it and we think this is valuable for us (Participant #2).

Of note, though other experts did not explicitly refer to intrinsic motivation, they did hint at the overall idea of being motivated to take part in a BLP by describing the value or purpose of the learning environment, as well as learner expectations of the program at hand.

User Experience

In the scoping review, user experience was identified as synonymous with usability and was found to focus on the perspectives of learners, faculty, and staff (e.g., teaching assistants), and on how these perspectives changed over time. (33) The experts nuanced this finding by suggesting that user experience is a sub-component of usability and that it deals with the emotions and feelings of learners. For example, one expert explained that:

User experience also covers other types of aspects, such as emotions, feelings. Not just satisfaction, overall satisfaction, of the user. So how user felt when using the platform? Did he feel anxious? Tired? Or another type of feeling that he or she may feel during the interaction? (Participant #4).

Importantly, another expert explained that although user experience is an important measurement to gauge usability, it is still only one facet of this multidimensional construct and must be considered in tandem with the other facets to truly apprehend the overall impact of BLPs. The expert explained:

… experience measures [are] not measures of learning. You know, people can have a good experience at something and not learn something, so we have to be mindful of using these kinds of things in evaluation programs. Just like any good, you know, research process, you should triangulate your sources of data … forming your opinion on as to whether the program is successful or not (Participant #7).

Pedagogical Usability

The experts suggested a differentiation between the usability of the learning environment (i.e., asynchronous and synchronous learning modalities) and the content being taught. Two experts referred to this idea as ‘pedagogical usability.’ One expert explained:

I would distinguish the container from the content … the possibility to organize content, the possibility to set-up both synchronous and asynchronous sessions, the possibility to link different sections of the material according to special information needs ... (Participant #5).

To evaluate pedagogical usability (i.e., the usability of the content of BLPs and its delivery), experts highlighted several factors to consider: the relevance of the content to learners, its reliability (i.e., how accurate and true the content appears), how understandable the content is, the adherence of the educator to the syllabi, the possibility for learners to choose personal paths, options to engage learners with different learning preferences, and how well the BLP is delivered by the instructor(s).

Theme 2: Different facets of usability can be used to assess different aspects of BLPs

Table 2: Theme 2 - Different facets of usability can be used to assess different aspects of BLPs

| Sub-Theme | Illustrative Quotations from Interviews |
| --- | --- |
| Evaluating the Overall Learning Program | “You know the the bigger perspective of evaluation is quite tough. It has, it includes many things. It is multi-level. You have to evaluate always the content, the methodology, the training staff – the professors or the trainers, their ability– that’s very very important. I mean their ability to perform in e-learning situations because you know it’s not easy. I mean you can, there are many people and colleagues out there that are very good and and and experts in their field, but sometimes they are not so good in e-learning courses.” – Participant 2 |
| Evaluating the Overall Learning Program | “Ok! Well the evaluation is very complex, right? So, the main point is that it is difficult to evaluate the platform by separating the content that is provided by professor and the platform itself. So students, when you ask students to evaluate the platform, the learning management system for example, they usually evaluate the content that is provided by the platform, rather than the platform itself. So, most of the times, they, they, for example, if the professor does not provide a content that is interesting for them, or is not well explained, then the students are more likely to evaluate the platform more negatively when actually the platform is not so bad at all. So, it is a difficult point to evaluate this type of platform… So, yeah they evaluate for example, whether their content is well – is updated. They evaluate some aspects that try to understand how well the platform supports learning to learn remotely, right.” – Participant 4 |
| Evaluating the Overall Learning Program | “I would distinguish the container from the content. So, evaluating the container – uh – is somehow ‘generical’ to any kind of topic, so that’s usability, the possibility to organize content, the possibility to set-up both synchronous and asynchronous sessions, the possibility to link different sections of the material according to special information needs. And this is about the container and these are general requirements, notwithstanding the kind of topic….” – Participant 5 |
| Focusing Evaluations on the Asynchronous & Technological Features | “Well, usability is very important when we are talking about the digital aspects. I mean uhh how can we transfer usability into a face-to-face learning situation? I’m not sure about that. I think it is clear to me that we have to be clear that usability is about the design of digital things. I mean, okay, there are several economic factors and human factors that we have to take into account when we design other kinds of things, but I want to be clear in order, you know, to frame the situation, when we are talking about usability, we are talking about the digital design, the design of digital uhh systems. Not forms. So usability is very important in the design of blended learning regarding the aspect of how we design and deliver the e-learning aspect of the blended learning thing. Okay?” – Participant 2 |
| Focusing Evaluations on the Asynchronous & Technological Features | “I mean, e-learning poses several difficulties, it has several specific you know obstacles, that you have to overcome, it’s not so easy to do. So you have to evaluate the infrastructure, the content, the instructional design, the ability of trainers and instructors, when it comes to e-learning you have to evaluate the design and usability of e-learning application, or the usability of the learning management system tool that maybe used in this kind of situation. Uhm what else? I think that these are the most important ones.” – Participant 2 |
| Focusing Evaluations on the Synchronous & Traditional Face-to-Face Features | “So, that blended learning environment, sometimes we take things for granted because we might be in face-to-face and be like “Oh they can figure it out,” but we have to be much more intentional about how we organize information, the assignments and instructions. So it’s very important.” – Participant 3 |
| Focusing Evaluations on the Synchronous & Traditional Face-to-Face Features | “So it’s not just the learning management system, it could be the content or in-person content, so I think that can be the product.” – Participant 6 |
| Focusing Evaluations on the Content and Materials (Pedagogical Usability) | “But you know in both cases, I mean face-to-face and e-learning contexts, the key, the king, is always the content. I mean, we have to provide a very good content to the learners even if it is face-to-face or e-learning. Uhh I, I know this is not something knew, we are already aware of that, but we should stress this kind of parameter, I suppose” – Participant 2 |
| Focusing Evaluations on the Content and Materials (Pedagogical Usability) | “So, so, there’s two levels to that evaluation too, like, if you’re looking at the summative evaluation, you’re actually looking at what did they learn or what they’re able to achieve based on the course. But if you’re looking at more of the formative evaluation, which looks at the pedagogical usability, so then you know that that is good and does not impact how they learn. It’s two different levels. Or two different time frames when you do that.” – Participant 3 |
| Focusing Evaluations on the Content and Materials (Pedagogical Usability) | “Something that is – uh – in the middle between the container and the content is the possibility to provide the same content in different forms according to different learning styles – uh – because, for example, some students prefer to have all the material in one chunk, other students prefer to have sections, some students prefer to have the assessment at the end, other students prefer to have assessment in the middle – uh – and so, this is both related to the kind of content, the way the content is organized, but also to the possibility provided by the platform.” – Participant 5 |

Moving beyond definitions, experts explained how usability could and should be evaluated in the context of BLPs. A primary recommendation here was that the content being taught in BLPs, the synchronous learning components, and the asynchronous learning environment and activities should all be evaluated separately.

It was noted that the first five facets of usability as defined by the experts (i.e., accessibility and organization, effectiveness and ease-of-use, efficiency, satisfaction and learner motivation, and user experience) are essential in the evaluation of the asynchronous and synchronous learning environments in particular. For the content being taught in the BLP, although the first five facets of usability are important, the sixth facet (i.e., pedagogical usability) is the most critical.

Theme 3: Quantitative and qualitative data is needed to assess the multifaceted nature of usability

Table 3: Theme 3 – Quantitative and qualitative data is needed to assess the multifaceted nature of usability

| Sub-Theme | Illustrative Quotations from Interviews |
| --- | --- |
| Questionnaires | “Questionnaire as well, it is always a method which is valid.” – Participant 2 |
| Questionnaires | “Oh right right, just one thing, well, uh, I think that one of the problems of the questionnaires that are already seen in the literature is that they are very quantitative for this field. And I think that the, obtaining, gathering, qualitative data would be much more interesting. Well, in our last study, we obtained some better insights when trying to understand, trying to extract information from the qualitative data obtained provided by the students in the questionnaires, through the open-ended questions for example. Right so combining quantitative and qualitative data will be better for evaluating these types of platforms, not just using questionnaire with Likert scales for example.” – Participant 4 |
| Questionnaires | “If you think about what a student experience survey is, it’s a usability survey, it’s a user experience survey and that necessarily means that there has to be some form of meeting of minds of the teacher and the students in terms of how the learning is structured how the teaching is done um” – Participant 7 |
| Pre-Post Testing / Evaluation over Time | “So it’s a challenge, evaluating e-learning and it needs to be done, but overall, it’s understanding the process of e-learning which is about usability, and longer term, which is about transfer. How does it actually change practice? How does it change overtime? Because it might have stimulated people, I’ve got a PhD student … who’s looking at impact of health professional education training courses. One of them really really, I think one of the really important aspects that we need to think about very seriously is about how the people interact – who have they spoken to, what influences, as it stimulating them to read more, to do more, to look into it more, and they’re the types of questions, that we need, you know following what up after a course and then maybe 3 months later, following people, checking in has that course changed your ability to practice but also has that course stimulated to read more. Cause that’s really important information, because in fact there is no change in knowledge scores -because we did a control trial and saw that it didn’t really increase knowledge scores before and after – it didn’t increase knowledge, but what it did do is stimulate curiosity – and surely that is education.” – Participant 1 |
| Pre-Post Testing / Evaluation over Time | “but in order to go more, to go farther, and deeper, I would propose to you know to evaluate the participants after some months after the program has ended and, you know, try to observe them while they are doing the job. I mean, the best way and when it comes to medical education [laughs] for instance, the most important thing is, you know, not the conceptual uh kind of knowledge, but the experiential one. I mean a good doctor has to perform, not only to to to have the knowledge in his mind. [Laughs]. I mean, it is very important that that that every every doctor has to to to perform and interact very well with a patient and be able to apply his or her knowledge. It’s not that okay I got it, I can do perfectly fine in a test and that’s it. No no. We have to go beyond the basic and or imagine we are talking about surgeons, [laughs] we have to see them in specific operations. I mean, how they do it? This is so important! In order to evaluate if their training was effective one or good one. Uhh, well as I said, you know, evaluation in general has many many many levels.” – Participant 2 |
| Interviews | “When it comes to the direction of an e-learning kind of thing, of the blended learning, uhh some methods would be usability testing, uhh user interviews – I mean you have to context some interviews with the learners. Uhh okay, we also use some kinds of questionnaires, questionnaires are always helpful, but uhh that would be in addition to the usability testing sessions. I mean first of all, usability testing sessions uhh which would be accompanied by usability interviews and questionnaires.” – Participant 2 |
| Interviews | “Uhh regarding the non-digital thing of the non-blended learning, okay aaah evaluation could be in the form – once again – interviews could be a very useful way – a speak with the participants and ask them face-to-face and ask them about the quality of the program they are participating in.” – Participant 2 |
| Interviews | “There is this idea of, I guess, a community of learners and those people who are able to get involved in the discussion um. If you think about it’s a usable situation.” – Participant 7 |
| Observations | “Uhh another kind of method would be to observe how people use this kind of e-learning applications, in a natural way, if it is possible… Uhh when I say to observe, I mean if it would be possible for the researcher to you know to spend some time with the learner or spend some days with the learner when they use the e-learning systems of the blended learning.” – Participant 2 |
| Observations | “but in order to go more, to go farther, and deeper, I would propose to you know to evaluate the participants after some months after the program has ended and, you know, try to observe them while they are doing the job. I mean, the best way and when it comes to medical education for instance, the most important thing is, you know, not the conceptual uh kind of knowledge, but the experiential one. I mean a good doctor has to perform, not only to to to have the knowledge in his mind. I mean, it is very important that that that every every doctor has to to to perform and interact very well with a patient and be able to apply his or her knowledge. It’s not that okay I got it, I can do perfectly fine in a test and that’s it. No no. We have to go beyond the basic and or imagine we are talking about surgeons, we have to see them in specific operations. I mean, how they do it? This is so important! In order to evaluate if their training was effective one or good one.” – Participant 2 |
| Usability Testing | “When it comes to the direction of an e-learning kind of thing, of the blended learning, uhh some methods would be usability testing, uhh user interviews – I mean you have to context some interviews with the learners. Uhh okay, we also use some kinds of questionnaires, questionnaires are always helpful, but uhh that would be in addition to the usability testing sessions. I mean first of all, usability testing sessions uhh which would be accompanied by usability interviews and questionnaires.” – Participant 2 |
| Usability Testing | “Um if we, if we talked about the technology side of it that, again it’s about thinking about how you present you materials and your activities and making sure that they are... I suppose you still have to go through some form of user testing regime to figure out whether what you're doing is understandable by others but um.” – Participant 7 |
| Usability Testing | “I’d probably go back to the old fashioned way of doing usability tests with a target audience. Um, I mean, It's often difficult to do it in the education sense because you might not be able to obtain access to the potential students in advance, but you can always get colleagues to look at things.” – Participant 7 |
| Triangulation of Methods | “Well, I don’t know if I, I use one specific tool. I actually use different things related to instructional design paradigms that I know and it’s based on problem-based learning.” – Participant 3 |
| Triangulation of Methods | “Yeah, so if I can revise what I suggested, I said that there probably you can use two, probably three, the generic one, and the e-learning usability scales, and then the program evaluation, the medical subjects.” – Participant 6 |
| Triangulation of Methods | “…um just like any good, you know, research process you should triangulate your sources of data are about what forming your opinion on as to whether the program is successful or not.” – Participant 7 |
| Tools and Frameworks to Guide BLP Evaluations | “…the latest Kirkpatrick evaluation model that’s out has many many more process questions in it…” – Participant 1 |
| Tools and Frameworks to Guide BLP Evaluations | “Well there’s the technology acceptance model by Davis, and that talks about – there’s an intention to use something is dependent on the perception of usefulness and the perception of ease of use.” – Participant 1 |
| Tools and Frameworks to Guide BLP Evaluations | “I suggest that we can use the usability instruments. So measuring the usability of the product in general, so because we have the generic usability instrumentation, for example the system usability scales – I think it’s a very well known instruments in this field, but I think we need to have additional instruments which focus more on the field, for example if we have engineering online course, I think we need to prepare a specific instrument to measure the engineering aspect. But if we measure the medical, I think we need to have additional instruments, so not only measuring the generic usability of the product, but also the medical education program itself. So I think we can use two type of instruments of measurements. So the instrument in generic – so it covers the technology aspect. But we also need to add the usability in terms of let’s say the blended learning usability. I just found that I think a researcher came up with the e-learning usability scale, so it’s not just system usability scales which can be used for any program, but I just found that there is e-learning usability scales which focus more on the e-learning software usability. And I think we also need to evaluate the educational program itself.” – Participant 6 |

The experts highlighted the need to adopt both closed and open-ended questions. They noted that multiple-choice questions can help program evaluators garner an overall perspective of the usability of BLPs, and that short-answer questions can help evaluators better understand learners and their context. Some experts also recommended pre-post testing, as such evaluations shed light on changes in subjective perceptions or objective GPA scores.

When asked how the experts would evaluate usability in BLPs within HPE, each expert discussed different tools and frameworks. Their suggestions included: the E-learning Usability Scale, the Technology Acceptance Model, the System Usability Scale, and the Kirkpatrick Model. Furthermore, some experts discussed the importance of interviews, whereas others discussed the importance of observing the interaction of learners with learning management systems.

Towards the Blended Learning Usability Evaluation – Questionnaire (BLUE-Q)

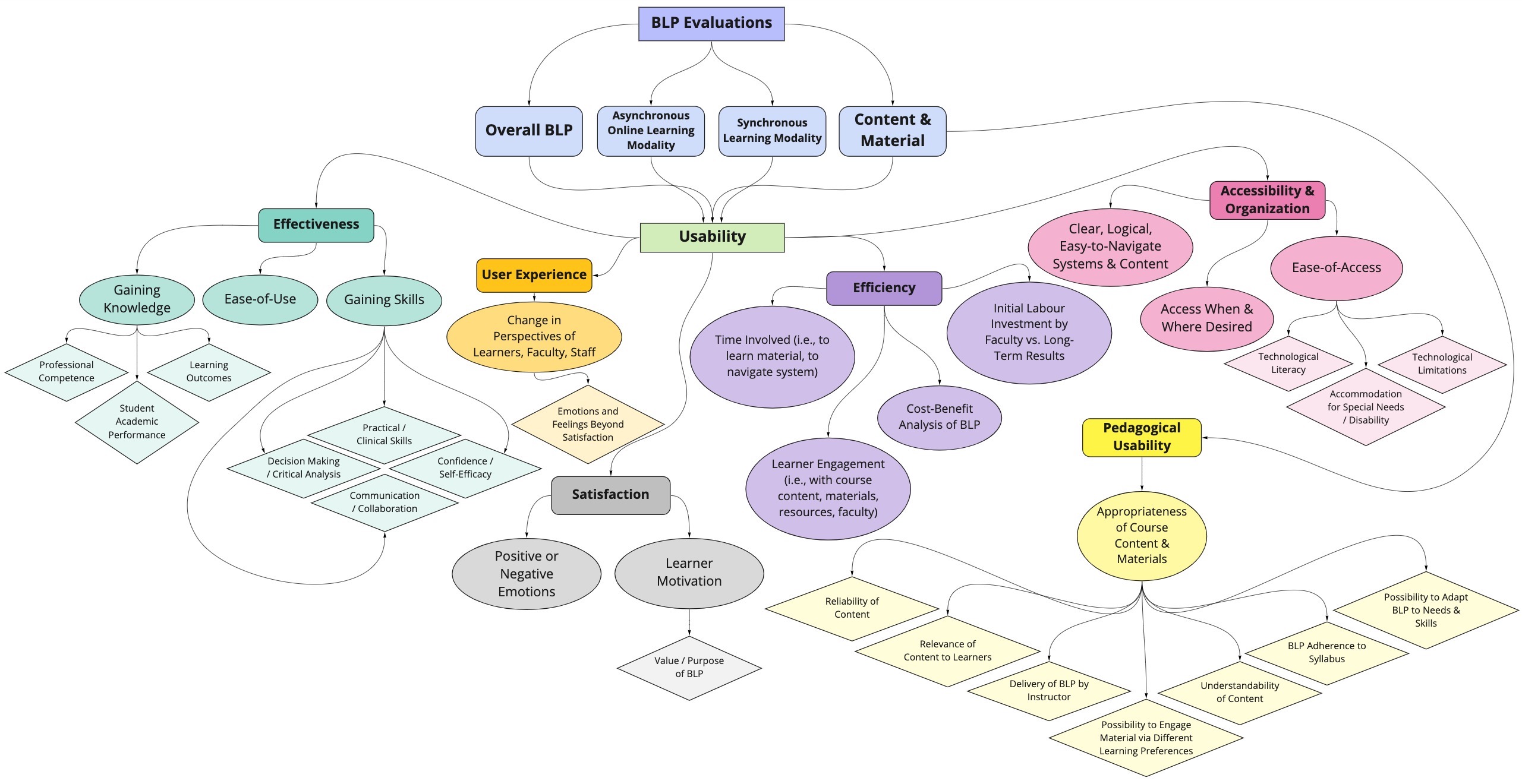

Using the learnings and recommendations generated from the three themes, we evolved the conceptual map developed in our previously published scoping review to further elucidate the various elements for evaluating usability in BLPs within HPE (Figure 1).

The first theme helped us pair accessibility and organization in one sub-category, introduce pedagogical usability as a sub-concept for specifically evaluating the content and materials of BLPs, and indicate that user experience and accessibility/organization are sub-components of usability (illustrated via unidirectional arrows). The second theme helped us establish four main pathways by which BLP usability evaluations can take place (i.e., BLP evaluations can focus on the different components of the program or can evaluate the program in its entirety). The third theme assisted in consolidating the sub-components of the various domains of usability (e.g., sub-components of effectiveness are now limited to gaining knowledge, gaining skills, and ease-of-use). Moving forward, the evolved concept map can serve as an essential pillar in guiding usability evaluations across BLPs within and potentially even beyond the field of HPE.

Through these themes and the evolved concept map, an initial version of an instrument to evaluate BLPs in HPE was developed and called the BLUE-Q. Preliminary face validity was assessed through research team and stakeholder engagement meetings. This initial version of the instrument currently includes 55 Likert scale items and six open-ended questions. The preliminary version of the questionnaire is currently under validation.

Discussion

To strengthen the usability, generalizability, and rigour of BLPs within HPE, both a new framework and instrument are needed to guide evaluations. Through this study, a more nuanced understanding of usability and its applicability in BLP evaluations within HPE was generated via analysis of interview data from seven international experts – which in turn enabled (1) the evolution of a previously developed conceptual map depicting a framework for evaluating the usability of BLPs within HPE; and (2) the development of the initial version of the BLUE-Q.

Four critical additions were made to the usability framework we previously developed through our scoping review. (33) First, the usability facet of accessibility was expanded to consider learner limitations with respect to using technology. Experts also suggested that BLP developers and evaluators must consider the organization of the learning content and environment alongside accessibility. Second, the relationship between ease-of-use and effectiveness is clarified in this study. In the scoping review, ease-of-use was analyzed as synonymous with effectiveness. The experts, however, explained that ease-of-use leads to the effectiveness of a BLP. Third, learner motivation was deemed a critical aspect of usability that relates directly to satisfaction. The experts explained that if learners do not feel motivated to learn the content or engage with the learning platform, the overall perception of usability would be negatively affected. Finally, pedagogical usability was added to group evaluations specific to the content and material being taught and used in the BLP.

It is important to note that, although the number of participants in this study might seem limited, they were experts recruited from around the world. Their similar conceptualizations of usability (i.e., data saturation) both validated and enhanced our framework for usability. Furthermore, each expert provided unique methods, frameworks, and tools to evaluate the usability of BLPs within HPE. This finding supports the observation that there is currently no unanimously agreed-upon method or instrument to evaluate usability in BLPs within HPE, even among experts. See the supplementary materials for tables that present raw data to outline the themes.

In conclusion, thematic analysis of in-depth interviews from seven international experts in usability and program evaluation assisted in strengthening a framework for usability that we previously developed through a rigorous scoping review. Researchers can use this revised usability framework, depicted in Figure 1, to structure their HPE-based BLP evaluations. Additionally, this framework was used to develop a new instrument, coined the BLUE-Q, which will be useful in ascertaining the comparability, rigour, and systematic improvement of BLPs across the field of HPE – all of which are necessary to ensure that such programs are well designed, well received by learners, and genuinely facilitate learning. (46,47) Moving forward, the BLUE-Q’s psychometric properties will be evaluated using Bayesian factor analysis and seminal guidelines for content validation to ensure it is viable for use by scholars across the field of HPE. (48-50)

References

- Garrison DR, Kanuka H. Blended learning: Uncovering its transformative potential in higher education. The internet and higher education. 2004;7(2):95-105. DOI:10.1016/j.iheduc.2004.02.001

- Garrison DR, Vaughan ND. Blended learning in higher education: Framework, principles, and guidelines. John Wiley & Sons; 2008. DOI:10.1002/9781118269558

- Williams C. Learning on-line: A review of recent literature in a rapidly expanding field. Journal of further and Higher Education. 2002 Aug 1;26(3):263-72. https://doi.org/10.1080/03098770220149620

- Watson J. Blended learning: the convergence of online and face-to-face education. North American Council for Online Learning: Promising Practices in Online Learning; 2008. Retrieved from: https://files.eric.ed.gov/fulltext/ED509636.pdf

- Hrastinski S. What Do We Mean by Blended Learning? TechTrends. 2019; 63, 564–569. https://doi.org/10.1007/s11528-019-00375-5

- Abrosimova G, Kondrateva I, Voronina E, Plotnikova N. Blended Learning in University Education. Humanities & Social Science Reviews. 2019; 7(6), 6-10. https://doi.org/10.18510/hssr.2019.762

- Cronje J. Towards a new definition of blended learning. Electronic journal of e-Learning. 2020 Feb 1;18(2):pp114-121. DOI: 10.34190/EJEL.20.18.2.001

- Armellini A, Rodriguez BC. Active Blended Learning: Definition, Literature Review, and a Framework for Implementation. Cases on Active Blended Learning in Higher Education. 2021:1-22. DOI: 10.4018/978-1-7998-7856-8.ch001

- Leidl DM, Ritchie L, Moslemi N. Blended learning in undergraduate nursing education–A scoping review. Nurse Education Today. 2020 Mar 1;86:104318. DOI: 10.1016/j.nedt.2019.104318

- Bersin J. The blended learning book: Best practices, proven methodologies, and lessons learned. John Wiley & Sons; 2004 Sep 24.

- Graham CR, Woodfield W, Harrison JB. A framework for institutional adoption and implementation of blended learning in higher education. The internet and higher education. 2013 Jul 1;18:4-14. https://doi.org/10.1016/j.iheduc.2012.09.003

- Zainuddin Z, Haruna H, Li X, Zhang Y, Chu SK. A systematic review of flipped classroom empirical evidence from different fields: what are the gaps and future trends?. On the Horizon. 2019 Jun 3. DOI: 10.1108/OTH-09-2018-0027

- Awidi IT, Paynter M. The impact of a flipped classroom approach on student learning experience. Computers & Education. 2019 Jan 1;128:269-83. DOI: 10.1016/j.compedu.2018.09.013

- Rafiola R, Setyosari P, Radjah C, Ramli M. The Effect of Learning Motivation, Self-Efficacy, and Blended Learning on Students’ Achievement in The Industrial Revolution 4.0. International Journal of Emerging Technologies in Learning (iJET). 2020 Apr 24;15(8):71-82. DOI: 10.3991/ijet.v15i08.12525

- Vallée A, Blacher J, Cariou A, Sorbets E. Blended learning compared to traditional learning in medical education: systematic review and meta-analysis. Journal of medical Internet research. 2020;22(8):e16504. DOI: 10.2196/16504

- Arora A, Rice K, Adams A. Blended Learning as a Transformative Educational Approach for Qualitative Health Research. University of Toronto Journal of Public Health. 2022 Feb 25;3(1). https://doi.org/10.33137/utjph.v3i1.37639

- Oguguo BC, Nannim FA, Agah JJ, Ugwuanyi CS, Ene CU, Nzeadibe AC. Effect of learning management system on Student’s performance in educational measurement and evaluation. Education and Information Technologies. 2021 Mar;26(2):1471-83. https://doi.org/10.1007/s10639-020-10318-w

- Pereira JA, Pleguezuelos E, Merí A, Molina‐Ros A, Molina‐Tomás MC, Masdeu C. Effectiveness of using blended learning strategies for teaching and learning human anatomy. Medical education. 2007 Feb;41(2):189-95. DOI:10.1111/j.1365-2929.2006.02672.x

- Fernandes RA, de Oliveira Lima JT, da Silva BH, Sales MJ, de Orange FA. Development, implementation and evaluation of a management specialization course in oncology using blended learning. BMC medical education. 2020 Dec 1;20(1):37. https://doi.org/10.1186/s12909-020-1957-4

- Sáiz-Manzanares MC, Escolar-Llamazares MC, Arnaiz González Á. Effectiveness of blended learning in nursing education. International journal of environmental research and public health. 2020 Jan;17(5):1589. DOI: 10.3390/ijerph17051589

- Muresanu D, Buzoianu AD. Moving Forward with Medical Education in Times of a Pandemic: Universities in Romania Double Down on Virtual and Blended Learning. Journal of Medicine and Life. 2020 Oct;13(4):439. DOI: 10.25122/jml-2020-1008

- Theoret C, Ming X. Our education, our concerns: The impact on medical student education of COVID‐19. Medical education. 2020 Jul;54(7):591-2. DOI: 10.1111/medu.14181

- Torda AJ, Velan G, Perkovic V. The impact of the COVID-19 pandemic on medical education. Med J Aust. 2020 Aug 1;14(1). DOI: 10.5694/mja2.50705

- Steelcase. COVID-19 Accelerates Blended Learning; The recent crisis is showcasing the upside of a blended learning approach to education. N.D. Retrieved from: https://www.steelcase.com/research/articles/topics/education/covid-19-accelerates-blended-learning/

- Bordoloi R, Das P, Das K. Perception towards online/blended learning at the time of Covid-19 pandemic: an academic analytics in the Indian context. Asian Association of Open Universities Journal. 2021 Feb 16. DOI: 10.1108/AAOUJ-09-2020-0079

- Adel A, Dayan J. Towards an intelligent blended system of learning activities model for New Zealand institutions: an investigative approach. Humanities and Social Sciences Communications. 2021 Mar 16;8(1):1-4. https://doi.org/10.1057/s41599-020-00696-4

- Kim JW, Myung SJ, Yoon HB, Moon SH, Ryu H, Yim JJ. How medical education survives and evolves during COVID-19: Our experience and future direction. PloS one. 2020 Dec 18;15(12):e0243958. https://doi.org/10.1371/journal.pone.0243958

- Lala SG, George AZ, Wooldridge D, Wissing G, Naidoo S, Giovanelli A, et al. A blended learning and teaching model to improve bedside undergraduate paediatric clinical training during and beyond the COVID-19 pandemic. African Journal of Health Professions Education. 2021 Mar 1;13(1):18-22. https://doi.org/10.7196/AJHPE.2021.v13i1.1447

- Cheng SO, Liu A. Using online medical education beyond the COVID-19 pandemic–A commentary on “The coronavirus (COVID-19) pandemic: Adaptations in medical education”. International Journal of Surgery (London, England). 2020 Nov 16. DOI: 10.1016/j.ijsu.2020.05.022

- Metz AJ. Why conduct a program evaluation? Five reasons why evaluation can help an out-of-school time program. Research-to-Results Brief. Child TRENDS. 2007 Oct;4. Retrieved from: https://www.childtrends.org/wp-content/uploads/2013/04/child_trends-2007_10_01_rb_whyprogeval.pdf

- Cleveland-Innes M, Wilton D. Chapter 8: Evaluating Successful Blended Learning. Guide to Blended Learning. Athabasca University. 2018. Retrieved from: https://openbooks.col.org/blendedlearning/chapter/chapter-8-evaluating-successful-blended-learning/

- Arora A. Usability in blended learning programs within health professions education: A scoping review. Available from Dissertations & Theses at McGill University; ProQuest Dissertations & Theses Global; 2019. Retrieved from https://proxy.library.mcgill.ca/login?url=https://www.proquest.com/dissertations-theses/usability-blended-learning-programs-within-health/docview/2516891062/se-2

- Arora AK, Rodriguez C, Carver T, Teper MH, Rojas-Rozo L, Schuster T. Evaluating usability in blended learning programs within health professions education: a scoping review. Medical science educator. 2021 Jun;31(3):1213-46. Retrieved from: https://link.springer.com/article/10.1007/s40670-021-01295-x

- Bowyer J, Chambers L. Evaluating blended learning: Bringing the elements together. Research Matters: A Cambridge Assessment Publication. 2017;23:17-26. Retrieved from: https://www.cambridgeassessment.org.uk/Images/375446-evaluating-blended-learning-bringing-the-elements-together.pdf

- Singh H. Building effective blended learning programs. In Challenges and Opportunities for the Global Implementation of E-Learning Frameworks 2021 (pp. 15-23). IGI Global. DOI: 10.4018/978-1-7998-7607-6

- Mohammed Abdel-Haq E. The Blended Learning Model: Does It Work? Sohag University International Journal of Educational Research. 2021 Jan 1;3(3):29-40. DOI: 10.21608/SUIJER.2021.122458

- International Organization for Standardization. ISO 9241-11:2018(en) Ergonomics of human system interaction — Part 11: Usability: Definitions and concepts. 2018. Retrieved from https://www.iso.org/obp/ui/#iso:std:iso:9241:-11:ed-2:v1:en

- Ifinedo P, Pyke J, Anwar A. Business undergraduates’ perceived use outcomes of Moodle in a blended learning environment: The roles of usability factors and external support. Telematics and Informatics. 2018 Apr 1;35(1):93-102. DOI: 10.1016/j.tele.2017.10.001

- Ventayen RJ, Estira KL, De Guzman MJ, Cabaluna CM, Espinosa NN. Usability evaluation of google classroom: Basis for the adaptation of gsuite e-learning platform. Asia Pacific Journal of Education, Arts and Sciences. 2018 Jan;5(1):47-51. Retrieved from: http://apjeas.apjmr.com/wp-content/uploads/2017/12/APJEAS-2018.5.1.05.pdf

- Nakamura WT, de Oliveira EHT, Conte T. Usability and user experience evaluation of learning management systems: a systematic mapping study. In International Conference on Enterprise Information Systems. 2017;2:97–108. DOI: 10.5220/0006363100970108

- Arksey H, O'Malley L. Scoping studies: towards a methodological framework. International journal of social research methodology. 2005 Feb 1;8(1):19-32. https://doi.org/10.1080/1364557032000119616

- Green J, Thorogood N. Qualitative methods for health research. Sage; 2018 Feb 26.

- Marshall MN. Sampling for qualitative research. Family practice. 1996 Jan 1;13(6):522-6. DOI: 10.1093/fampra/13.6.522

- Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101. https://doi.org/10.1191/1478088706qp063oa

- Saunders B, Sim J, Kingstone T, Baker S, Waterfield J, Bartlam B, Burroughs H, Jinks C. Saturation in qualitative research: exploring its conceptualization and operationalization. Quality & quantity. 2018 Jul;52(4):1893-907. https://doi.org/10.1007/s11135-017-0574-8

- Precel K, Eshet-Alkalai Y, Alberton Y. Pedagogical and design aspects of a blended learning course. International Review of Research in Open and Distributed Learning. 2009;10(2). https://doi.org/10.19173/irrodl.v10i2.618

- Sandars J. The importance of usability testing to allow e-learning to reach its potential for medical education. Education for Primary Care. 2010 Jan 1;21(1):6-8. DOI: 10.1080/14739879.2010.11493869

- Zhang H, Schuster T. Questionnaire instrument development in primary health care research: A plea for the use of Bayesian inference. Canadian Family Physician. 2018 Sep 1;64(9):699-700. Retrieved from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6135135/pdf/0640699.pdf

- Zhang H, Schuster T. A methodological review protocol of the use of Bayesian factor analysis in primary care research. Systematic Reviews. 2021 Dec;10(1):1-5. https://doi.org/10.1186/s13643-020-01565-6

- Haynes SN, Richard D, Kubany ES. Content validity in psychological assessment: A functional approach to concepts and methods. Psychological assessment. 1995 Sep;7(3):238. https://doi.org/10.1037/1040-3590.7.3.238

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.